I don’t want to be the type of tester who brings a developer a bug, proclaims “It’s broken!” and has nothing more to add. That leads to frustrated developers and to the perception that testers only deliver bad news. Because I am the testing specialist on my team, it’s my job to bring issues of quality to the attention of my team members, and how I present those issues shapes how my fellow team members view me. I like to put as much information as I can into my bug reports because it leaves less work for the developer when they go to fix the bug. A bug can occur anywhere from the moment a user types text into a form through to the database behind the server. The window of failure is the set of components in the system under test that you haven’t yet ruled out as the location of the bug. The more I narrow down the window of failure, the more valuable my bug reports are and the faster a developer can commit a fix.

Let’s imagine a form that is not saving data: a bug in the data binding between the HTML and JavaScript means a required field is never sent to the server. Here are several ways I could explain the issue to a developer:

- The form is broken

- This provides the developer little information about the actual problem and the failure can be occurring anywhere from the client to the server. I cannot rule out any piece of the system.

- The form breaks when I press the save button

- This gives some steps to reproduce which helps the developer reliably reproduce the issue on their local environment at least, but I still cannot rule out any piece of the system.

- The form is broken because there is an error in the javascript console

- This will likely provide the developer with a stack-trace to help narrow down the window of failure, but the onus is on the developer to narrow down where the issue is occurring.

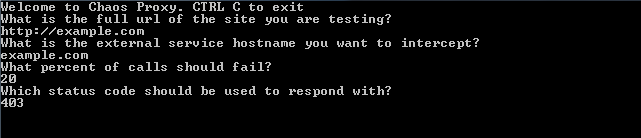

- The data is not saving because a 400 Bad Request error is being returned from the server

- This information will narrow the window of failure considerably in that we know that the data being sent to the server is not formatted correctly when coming from the client. From this information, I can safely assume that the bug is not in the server-side code or issues with the database.

- The javascript is throwing an error about a property being undefined on the model

- What this tells me is that the error is most likely in the binding between the HTML and javascript and the window of failure is narrowed to the client HTML/Javascript interaction. This shows an understanding of how the application is written and provides the developer with a very narrow window of failure letting them focus on what the eventual fix is.
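To make the last two scenarios concrete, here is a minimal TypeScript sketch of the kind of binding bug described above, along with one quick check a tester might run: comparing the payload the client actually sends against the fields the server requires. Everything in it is hypothetical (`FormModel`, `REQUIRED_FIELDS`, `bindInputs` and `findMissingFields` are illustrative names, not from any real application), and it assumes the server answers a payload that lacks a required field with a 400 Bad Request.

```typescript
// Hypothetical form model; both fields are assumed to be required by the server.
interface FormModel {
  name?: string;
  email?: string;
}

// Hypothetical list of fields the server requires on every save request.
const REQUIRED_FIELDS: (keyof FormModel)[] = ["name", "email"];

// Simulates the client copying form inputs onto the model.
// The bug: the email input is read from the key "e-mail" instead of "email",
// so model.email is left undefined.
function bindInputs(inputs: Record<string, string>): FormModel {
  const model: FormModel = {};
  model.name = inputs["name"];
  model.email = inputs["e-mail"]; // binding bug: wrong key
  return model;
}

// Returns the required fields that are absent from the payload about to be sent.
function findMissingFields(payload: FormModel): string[] {
  return REQUIRED_FIELDS.filter((field) => payload[field] === undefined);
}

// Usage: serialize the model the way a request body would be built, then check it.
const model = bindInputs({ name: "Ada", email: "ada@example.com" });
const payload = JSON.parse(JSON.stringify(model)) as FormModel;
console.log(findMissingFields(payload)); // → ["email"]
```

Because `JSON.stringify` drops properties whose value is `undefined`, the `email` field silently disappears from the request body. Spotting it missing in the outgoing payload rules out the server and the database in one step and points straight at the client-side binding.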

At times, the most difficult part of fixing a bug is understanding where the application is broken. Once the break is located, the fix may be as simple as a one-line change. Getting better at narrowing down a bug’s window of failure lets the developer focus on the fix.

Where this can get you into trouble is spending too much time trying to narrow the window of failure. We all like to solve puzzles, but sometimes they are best left to those who are best positioned to solve them. More often than not, the very best person to find the cause of a bug is the person who wrote the code. If a reasonable amount of time lets me narrow the window of failure even a little, it’s time well spent; otherwise, my time is better spent doing more testing. There are many times when I have no idea what is causing a defect, but I still do my best to provide as much information as I can to help others reproduce the issue. It’s all about finding a balance between testing and providing the most valuable bug report.

If I’ve done all this work finding where the application breaks, why don’t I just fix the bug? Often I will, but that’s a topic for another post.